Release When Ready using Bitbucket Pipelines, AWS and Kubernetes

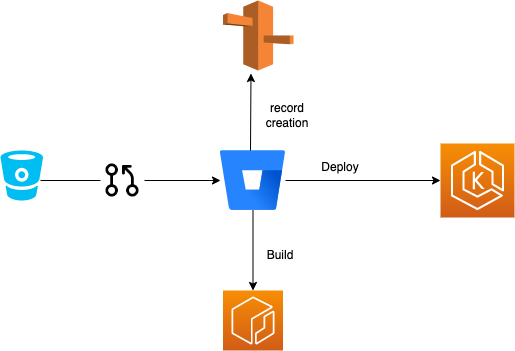

What is RWR?

RWR ARCHITECTURE

Release When Ready (RWR) lets a developer check that the changes in an open pull request work correctly before they are merged into the production branch. Without it, the changes are merged first and any glitch shows up in production. For every pull request, RWR spins up a live environment that can be tested just like production.

To set this up, we need a Bitbucket pipeline, Kubernetes configuration files, and a Terraform script that creates the required AWS resources.
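RWR keeps each pull request's environment separate from production and from other pull requests by suffixing every resource name with the pull request ID (`$BITBUCKET_PR_ID`). Below is a minimal Python sketch of the naming rules the pipeline's sed commands apply. Python was chosen because the pipeline already builds on a Python image. `pr_environment`, `PrEnvironment` and the sample names are hypothetical, and `ABC.com` is the placeholder domain from the Terraform script.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrEnvironment:
    """Per-pull-request resource names (hypothetical helper, not part of the pipeline)."""
    ecr_repository: str
    deployment: str
    namespace: str
    service: str
    ingress: str
    host: str


def pr_environment(deployment_name: str, namespace: str, service_name: str,
                   ingress_name: str, pr_id: int, domain: str = "ABC.com") -> PrEnvironment:
    """Suffix each base name with the pull request ID, as the pipeline does with $BITBUCKET_PR_ID."""
    suffix = f"-{pr_id}"
    return PrEnvironment(
        ecr_repository=deployment_name + suffix,     # ECR_NAME in terraform.tf
        deployment=deployment_name + suffix,         # DEPLOYMENTNAME in the manifests
        namespace=namespace + suffix,                # NAMESPACE
        service=service_name + suffix,               # SERVICENAME
        ingress=ingress_name + suffix,               # INGRESSNAME
        host=f"{deployment_name}{suffix}.{domain}",  # RECORDNAME.ABC.com in terraform.tf
    )


if __name__ == "__main__":
    env = pr_environment("orders-api", "orders", "orders-svc", "orders-ingress", pr_id=42)
    print(env.namespace)  # orders-42
    print(env.host)       # orders-api-42.ABC.com
```

Because the suffix is the only thing that changes between pull requests, two open pull requests never share a namespace, ECR repository or host name.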

1. Create Bitbucket Variables

Depending on how you have configured your ingress, you may also need to modify the ingress variables (ALB_NAME, INGRESS_CLASS_NAME, SSL_CERT_ARN).

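Variables that need to be created (add them as repository variables, and mark AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY as secured so they are masked in the build logs):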

SERVICENAME

ECR_NAME

AWS_ACCESS_KEY_ID

AWS_SECRET_ACCESS_KEY

RECORDNAME

ELB_NAME

ELB_HOSTED_ZONE

DEPLOYMENTNAME

BUILDENVVALUE

PORT

NAMESPACE

DEPLOYMENT_REGION

ALB_NAME

INGRESS_CLASS_NAME

SSL_CERT_ARN

MIN_REPLICA_COUNT

MAX_REPLICA_COUNT

INGRESSNAME

EKS_CLUSTER_NAME
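A missing or mistyped variable usually surfaces only as a half-filled manifest or a failed `terraform apply`. A small pre-flight check can catch it earlier. The sketch below is hypothetical: `missing_variables` and `replica_bounds_ok` are not part of Bitbucket. It assumes the variables reach the step as environment variables, which is how Bitbucket exposes repository variables to a build.

```python
from typing import List, Mapping, Sequence

# Variables from the list above; INGRESSNAME and EKS_CLUSTER_NAME are also read by the pipeline.
REQUIRED_VARIABLES = (
    "SERVICENAME", "ECR_NAME", "AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY",
    "RECORDNAME", "ELB_NAME", "ELB_HOSTED_ZONE", "DEPLOYMENTNAME", "BUILDENVVALUE",
    "PORT", "NAMESPACE", "DEPLOYMENT_REGION", "ALB_NAME", "INGRESS_CLASS_NAME",
    "SSL_CERT_ARN", "MIN_REPLICA_COUNT", "MAX_REPLICA_COUNT",
    "INGRESSNAME", "EKS_CLUSTER_NAME",
)


def missing_variables(env: Mapping[str, str],
                      required: Sequence[str] = REQUIRED_VARIABLES) -> List[str]:
    """Return the required names that are unset or empty in env."""
    return [name for name in required if not env.get(name)]


def replica_bounds_ok(env: Mapping[str, str]) -> bool:
    """The HPA expects integer counts with 1 <= MIN_REPLICA_COUNT <= MAX_REPLICA_COUNT."""
    try:
        low = int(env["MIN_REPLICA_COUNT"])
        high = int(env["MAX_REPLICA_COUNT"])
    except (KeyError, ValueError):
        return False
    return 1 <= low <= high


if __name__ == "__main__":
    # Sample values only; in a pipeline step you would pass os.environ instead.
    sample = {"MIN_REPLICA_COUNT": "1", "MAX_REPLICA_COUNT": "3", "PORT": "8080"}
    print(len(missing_variables(sample)))  # 16
    print(replica_bounds_ok(sample))       # True
```

In a pipeline step, a non-empty result from `missing_variables(os.environ)` would be a good reason to stop the build before Terraform runs.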

2. Create bitbucket-pipelines.yml (in the root of the repository)

```yaml
image: python:3.7.4

pipelines:
  pull-requests:
    "**":
      # Sequential steps: Terraform must create the ECR repository
      # before the Docker image can be pushed to it.
      - step:
          image: hashicorp/terraform
          script:
            # Fill the placeholders in terraform.tf with per-pull-request values
            - sed -i 's@ECR_NAME@'$DEPLOYMENTNAME-$BITBUCKET_PR_ID'@' terraform.tf
            - sed -i 's@AWS_ACCESS_KEY_ID@'$AWS_ACCESS_KEY_ID'@' terraform.tf
            - sed -i 's@AWS_SECRET_ACCESS_KEY@'$AWS_SECRET_ACCESS_KEY'@' terraform.tf
            - sed -i 's@RECORDNAME@'$DEPLOYMENTNAME-$BITBUCKET_PR_ID'@' terraform.tf
            - sed -i 's@ELB_NAME@'$ELB_NAME'@' terraform.tf
            - sed -i 's@ELB_HOSTED_ZONE@'$ELB_HOSTED_ZONE'@' terraform.tf
            - cat terraform.tf
            - terraform init
            - terraform validate
            - terraform plan
            - terraform apply -auto-approve
      - step:
          services:
            - docker
          caches:
            - pip
            - node
          script:
            - pip3 install awscli
            # Credentials come from the secured repository variables
            - aws configure set aws_access_key_id "${AWS_ACCESS_KEY_ID}"
            - aws configure set aws_secret_access_key "${AWS_SECRET_ACCESS_KEY}"
            - AWS_REGION="<region-to-deploy-to>"
            # ECR registry of your AWS account and the per-PR repository created by Terraform
            - REGISTRY="<aws-account-id>.dkr.ecr.${AWS_REGION}.amazonaws.com"
            - IMAGE="${REGISTRY}/${DEPLOYMENTNAME}-${BITBUCKET_PR_ID}"
            - TAG=${BITBUCKET_COMMIT}
            # Log Docker in to ECR (works with AWS CLI v1 and v2)
            - aws ecr get-login-password --region "$AWS_REGION" | docker login --username AWS --password-stdin "$REGISTRY"
            - docker build -t $IMAGE:$TAG .
            - docker push $IMAGE:$TAG
            # Fill the placeholders in the Kubernetes manifests
            - sed -i 's@imageid@'$TAG'@' k8s/deployment.yaml
            - sed -i 's@DEPLOYMENTNAME@'$DEPLOYMENTNAME-$BITBUCKET_PR_ID'@' k8s/deployment.yaml
            - sed -i 's@BUILDENVVALUE@'$BUILDENVVALUE'@' k8s/deployment.yaml
            - sed -i 's@NAMESPACE@'$NAMESPACE-$BITBUCKET_PR_ID'@' k8s/deployment.yaml
            - sed -i 's@PORT@'$PORT'@' k8s/deployment.yaml
            - sed -i 's@DEPLOYMENT_REGION@'$DEPLOYMENT_REGION'@' k8s/deployment.yaml
            - sed -i 's@NAMESPACE@'$NAMESPACE-$BITBUCKET_PR_ID'@' k8s/service.yaml
            - sed -i 's@SERVICENAME@'$SERVICENAME-$BITBUCKET_PR_ID'@' k8s/service.yaml
            - sed -i 's@PORT@'$PORT'@' k8s/service.yaml
            - sed -i 's@ALB_NAME@'$ALB_NAME'@' k8s/ingress.yaml
            - sed -i 's@INGRESSNAME@'$INGRESSNAME-$BITBUCKET_PR_ID'@' k8s/ingress.yaml
            - sed -i 's@NAMESPACE@'$NAMESPACE-$BITBUCKET_PR_ID'@' k8s/ingress.yaml
            - sed -i 's@INGRESS_CLASS_NAME@'$INGRESS_CLASS_NAME'@' k8s/ingress.yaml
            - sed -i 's@HOSTURL@'$DEPLOYMENTNAME-$BITBUCKET_PR_ID'@' k8s/ingress.yaml
            - sed -i 's@PORT@'$PORT'@' k8s/ingress.yaml
            - sed -i 's@SERVICENAME@'$SERVICENAME-$BITBUCKET_PR_ID'@' k8s/ingress.yaml
            - sed -i 's@SSL_CERT_ARN@'$SSL_CERT_ARN'@' k8s/ingress.yaml
            - sed -i 's@DEPLOYMENTNAME@'$DEPLOYMENTNAME-$BITBUCKET_PR_ID'@' k8s/ingress.yaml
            - sed -i 's@DEPLOYMENTNAME@'$DEPLOYMENTNAME-$BITBUCKET_PR_ID'@' k8s/hpa.yaml
            - sed -i 's@NAMESPACE@'$NAMESPACE-$BITBUCKET_PR_ID'@' k8s/hpa.yaml
            - sed -i 's@MIN_REPLICA_COUNT@'$MIN_REPLICA_COUNT'@' k8s/hpa.yaml
            - sed -i 's@MAX_REPLICA_COUNT@'$MAX_REPLICA_COUNT'@' k8s/hpa.yaml
            - sed -i 's@NAMESPACE@'$NAMESPACE-$BITBUCKET_PR_ID'@' k8s/namespace.yaml
            - cat k8s/deployment.yaml
            - cat k8s/ingress.yaml
            - cat k8s/service.yaml
            - cat k8s/hpa.yaml
            - cat k8s/namespace.yaml
            # Assumes the EKS cluster runs in AWS_REGION
            - aws eks update-kubeconfig --region $AWS_REGION --name $EKS_CLUSTER_NAME
            # Create the namespace first: kubectl applies the files in k8s/ in alphabetical
            # order, so namespace.yaml would come after deployment.yaml, hpa.yaml and ingress.yaml
            - pipe: atlassian/aws-eks-kubectl-run:2.2.0
              variables:
                AWS_ACCESS_KEY_ID: ${AWS_ACCESS_KEY_ID}
                AWS_SECRET_ACCESS_KEY: ${AWS_SECRET_ACCESS_KEY}
                AWS_DEFAULT_REGION: "Region of EKS cluster"
                CLUSTER_NAME: ${EKS_CLUSTER_NAME}
                KUBECTL_COMMAND: "apply"
                RESOURCE_PATH: "k8s/namespace.yaml"
            - pipe: atlassian/aws-eks-kubectl-run:2.2.0
              variables:
                AWS_ACCESS_KEY_ID: ${AWS_ACCESS_KEY_ID}
                AWS_SECRET_ACCESS_KEY: ${AWS_SECRET_ACCESS_KEY}
                AWS_DEFAULT_REGION: "Region of EKS cluster"
                CLUSTER_NAME: ${EKS_CLUSTER_NAME}
                KUBECTL_COMMAND: "apply"
                RESOURCE_PATH: "k8s/."
                DEBUG: "true"
```
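Each `sed -i 's@PLACEHOLDER@value@' file` line replaces the first occurrence of a placeholder on every line of the file. Because there is no `g` flag, a second occurrence on the same line is left untouched. The `@` delimiter means values containing `/`, such as certificate ARNs, need no escaping, but a value containing `@` or `&` would still break the command. The Python sketch below mimics that behaviour for literal placeholders so you can preview a rendered file locally. The function names and the toy template are hypothetical.

```python
from typing import Iterable, List, Tuple


def sed_substitute(text: str, placeholder: str, value: str) -> str:
    """Mimic `sed 's@PLACEHOLDER@VALUE@'` for a literal placeholder: replace only the
    first occurrence on each line, leaving later ones on that line as they are."""
    return "".join(line.replace(placeholder, value, 1)
                   for line in text.splitlines(keepends=True))


def render(text: str, substitutions: Iterable[Tuple[str, str]]) -> str:
    """Apply substitutions in order, like consecutive `sed -i` commands on one file."""
    for placeholder, value in substitutions:
        text = sed_substitute(text, placeholder, value)
    return text


def leftover_placeholders(text: str, placeholders: Iterable[str]) -> List[str]:
    """Placeholders still present after rendering (what the `cat` lines help you spot)."""
    return sorted({p for p in placeholders if p in text})


if __name__ == "__main__":
    # Toy template: the last line has the same placeholder twice.
    template = "name: DEPLOYMENTNAME\nnamespace: NAMESPACE\nargs: --port PORT --admin-port PORT\n"
    rules = [("DEPLOYMENTNAME", "orders-api-42"), ("NAMESPACE", "orders-42"), ("PORT", "8080")]
    rendered = render(template, rules)
    print(rendered)
    print(leftover_placeholders(rendered, [p for p, _ in rules]))  # ['PORT']
```

If a manifest really needs the same value twice on one line, adding the `g` flag to that sed command (`s@PORT@...@g`) replaces every occurrence.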

3. Create the Terraform script (terraform.tf) to create the resources

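```hcl
provider "aws" {
  region = "Region of resources"

  # Placeholders filled in by the pipeline's sed commands
  access_key = "AWS_ACCESS_KEY_ID"
  secret_key = "AWS_SECRET_ACCESS_KEY"
}

# Image repository for this pull request, named <DEPLOYMENTNAME>-<PR ID> by the pipeline
resource "aws_ecr_repository" "ECR_NAME" {
  name                 = "ECR_NAME"
  image_tag_mutability = "MUTABLE"

  image_scanning_configuration {
    scan_on_push = true
  }
}

# DNS alias <DEPLOYMENTNAME>-<PR ID>.ABC.com pointing at the load balancer
resource "aws_route53_record" "RECORDNAME" {
  zone_id = "ZONEID" # replace with the hosted zone ID of your domain
  name    = "RECORDNAME.ABC.com"
  type    = "A"

  alias {
    name                   = "ELB_NAME"        # DNS name of the load balancer
    zone_id                = "ELB_HOSTED_ZONE" # hosted zone ID of the load balancer
    evaluate_target_health = true
  }
}
```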
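Terraform builds both the ECR repository name and the Route53 record from `$DEPLOYMENTNAME-$BITBUCKET_PR_ID`, so a DEPLOYMENTNAME with upper-case letters or underscores breaks every pull request's environment. ECR rejects the repository name, and the record is not a usable host name. Below is a sketch of a quick check. It assumes the repository-name pattern and 2-256 length limit published in the ECR `CreateRepository` API reference, plus a conservative letters-digits-hyphens rule for the DNS label. Both rules are worth verifying against the current AWS documentation, and the helper names are hypothetical.

```python
import re

# Repository-name pattern from the ECR CreateRepository API reference (length 2-256).
ECR_REPOSITORY_NAME = re.compile(r"(?:[a-z0-9]+(?:[._-][a-z0-9]+)*/)*[a-z0-9]+(?:[._-][a-z0-9]+)*")
# Conservative host-name label: letters, digits and hyphens, no leading or trailing hyphen.
HOST_LABEL = re.compile(r"[a-z0-9](?:[-a-z0-9]*[a-z0-9])?")


def valid_ecr_repository_name(name: str) -> bool:
    """True if name fits the ECR length limits and repository-name pattern."""
    return 2 <= len(name) <= 256 and ECR_REPOSITORY_NAME.fullmatch(name) is not None


def valid_record_label(label: str) -> bool:
    """Check the RECORDNAME part of RECORDNAME.ABC.com (DNS is case-insensitive)."""
    return len(label) <= 63 and HOST_LABEL.fullmatch(label.lower()) is not None


if __name__ == "__main__":
    for deployment_name in ("orders-api", "Orders_API"):
        name = f"{deployment_name}-42"  # $DEPLOYMENTNAME-$BITBUCKET_PR_ID
        print(name, valid_ecr_repository_name(name), valid_record_label(name))
    # orders-api-42 True True
    # Orders_API-42 False False
```

Running a check like this against DEPLOYMENTNAME once, when the repository variables are created, is cheaper than discovering the problem in a failed `terraform apply`.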

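4. Create the Kubernetes configuration files (k8s/deployment.yaml, k8s/service.yaml, k8s/ingress.yaml, k8s/hpa.yaml and k8s/namespace.yaml)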
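The pipeline expects five manifests in k8s/ and substitutes specific placeholder tokens in each. The Python sketch below collects those tokens from the sed commands in step 2 and reports which ones a set of templates is missing. A file that does not exist at all is reported with every one of its tokens. The helper names are hypothetical, and the Namespace template in the example is only an illustration.

```python
from pathlib import Path
from typing import Dict, List, Mapping

# Tokens that the pipeline's sed commands replace, per manifest in k8s/.
EXPECTED_PLACEHOLDERS: Dict[str, List[str]] = {
    "deployment.yaml": ["imageid", "DEPLOYMENTNAME", "BUILDENVVALUE", "NAMESPACE",
                        "PORT", "DEPLOYMENT_REGION"],
    "service.yaml": ["NAMESPACE", "SERVICENAME", "PORT"],
    "ingress.yaml": ["ALB_NAME", "INGRESSNAME", "NAMESPACE", "INGRESS_CLASS_NAME",
                     "HOSTURL", "PORT", "SERVICENAME", "SSL_CERT_ARN", "DEPLOYMENTNAME"],
    "hpa.yaml": ["DEPLOYMENTNAME", "NAMESPACE", "MIN_REPLICA_COUNT", "MAX_REPLICA_COUNT"],
    "namespace.yaml": ["NAMESPACE"],
}


def missing_placeholders(manifests: Mapping[str, str]) -> Dict[str, List[str]]:
    """For each expected manifest, list the tokens its text lacks (a missing file lacks all)."""
    report: Dict[str, List[str]] = {}
    for filename, tokens in EXPECTED_PLACEHOLDERS.items():
        text = manifests.get(filename, "")
        absent = [token for token in tokens if token not in text]
        if absent:
            report[filename] = absent
    return report


def load_manifests(directory: Path) -> Dict[str, str]:
    """Read whichever of the expected manifest files exist in directory."""
    return {name: (directory / name).read_text()
            for name in EXPECTED_PLACEHOLDERS if (directory / name).is_file()}


if __name__ == "__main__":
    namespace_template = "apiVersion: v1\nkind: Namespace\nmetadata:\n  name: NAMESPACE\n"
    print(missing_placeholders({"namespace.yaml": namespace_template}))
```

Against the real templates, `missing_placeholders(load_manifests(Path("k8s")))` returning an empty dictionary means every file contains the tokens the pipeline will try to replace.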

Happy coding!